ResNet-50, introduced in 2015, was a standard benchmark for AI computer vision classification in 2021. It uses deep learning to classify (label) images, identifying objects such as cats, dogs, and cars. The model is still widely used in applications ranging from medical imaging to autonomous driving.

Adversarial attacks are a technique in which an image is modified so subtly that a human observer cannot detect any difference, yet an AI model fails to recognize the correct class. An attack can also be targeted, so that the model predicts a specific incorrect category chosen by the attacker.
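To make the idea concrete, here is a minimal sketch of a targeted attack. It is an illustration under loud assumptions, not the code behind "Brad Not-Pitt": the classifier is a toy linear model with made-up weights rather than ResNet-50, the "image" is a list of 64 hypothetical pixel values, and the names `targeted_attack`, `epsilon`, and `step` are our own. The method shown is a basic iterative signed-gradient attack: nudge every pixel a tiny amount toward the chosen category, and never let any pixel drift more than `epsilon` from the original.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, pixels):
    """Class probabilities of a toy linear classifier (one weight row per class)."""
    logits = [sum(w * p for w, p in zip(row, pixels)) for row in weights]
    return softmax(logits)


def top_class(weights, pixels):
    """Index of the most probable class."""
    probs = predict(weights, pixels)
    return probs.index(max(probs))


def targeted_attack(weights, pixels, target, epsilon=0.03, step=0.01, iters=10):
    """Push `pixels` toward class `target`, changing no pixel by more than `epsilon`."""
    adv = list(pixels)
    for _ in range(iters):
        probs = predict(weights, adv)
        for i in range(len(adv)):
            # Gradient of the cross-entropy loss for `target` with respect to pixel i.
            grad = sum(
                (probs[k] - (1.0 if k == target else 0.0)) * weights[k][i]
                for k in range(len(weights))
            )
            # Signed step that lowers the loss, i.e. raises the target's probability.
            adv[i] -= step * ((grad > 0) - (grad < 0))
            # Stay within epsilon of the original pixel and within the valid range.
            adv[i] = min(max(adv[i], pixels[i] - epsilon), pixels[i] + epsilon)
            adv[i] = min(max(adv[i], 0.0), 1.0)
    return adv


# Hypothetical setup: 64 "pixels", 3 classes, weights invented for illustration.
half = 32
weights = [
    [1.0] * half + [-1.0] * half,  # class 0: the correct label
    [-1.0] * half + [1.0] * half,  # class 1: the category we choose
    [0.0] * (2 * half),            # class 2: an unrelated category
]
image = [0.51] * half + [0.49] * half

adversarial = targeted_attack(weights, image, target=1)
print("predicted class before:", top_class(weights, image))
print("predicted class after: ", top_class(weights, adversarial))
print("largest pixel change:  ", max(abs(a - b) for a, b in zip(adversarial, image)))
```

In this toy setup the prediction flips from class 0 to class 1 while every pixel moves by at most 0.03 on a 0 to 1 scale. Attacking a real network such as ResNet-50 follows the same loop, except that the gradient comes from backpropagation through the network instead of a closed-form expression.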

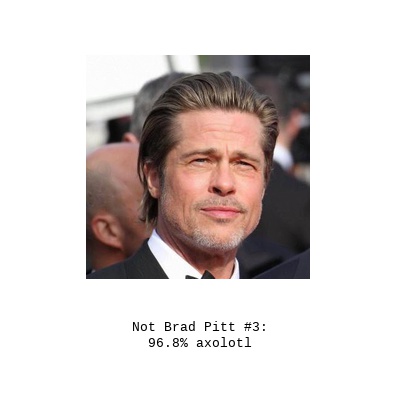

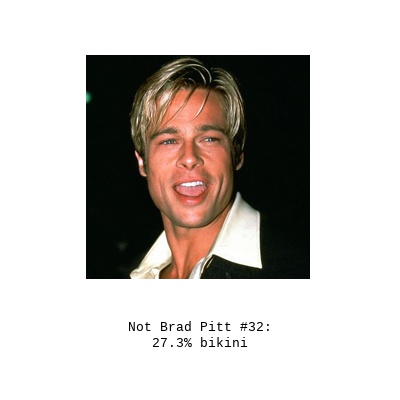

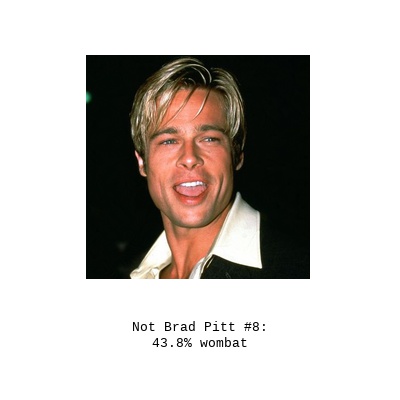

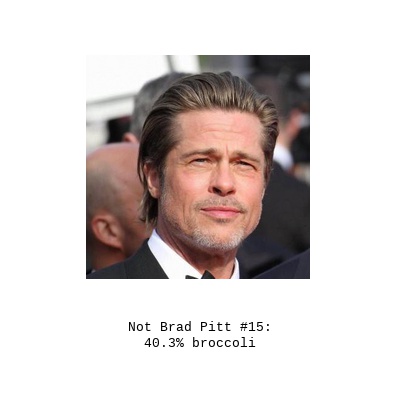

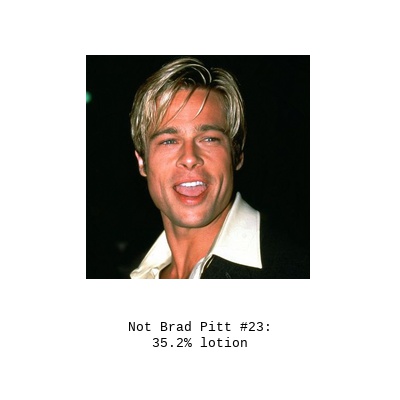

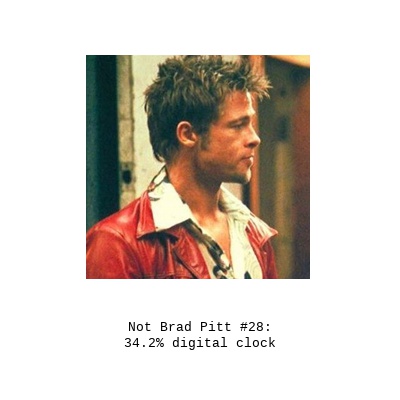

In "Brad Not-Pitt", we used an adversarial attack technique to make ResNet-50 misclassify images of Brad Pitt as a category we chose.

What if we changed all the available data on the internet so that current large models, such as ChatGPT, would identify broccoli as a sex symbol, or a digital clock as the anti-capitalist Tyler Durden, while to us they still looked the same?

What if people are generating skewed or fake content just to bias the new algorithms trained on this data?

What if we are biased by data that companies create using algorithms trained on data scraped from unreliable sources, and we accept it as the absolute truth?

Brad Not-Pitt (2021)

images that fool your AI-heart